By Adam Brin

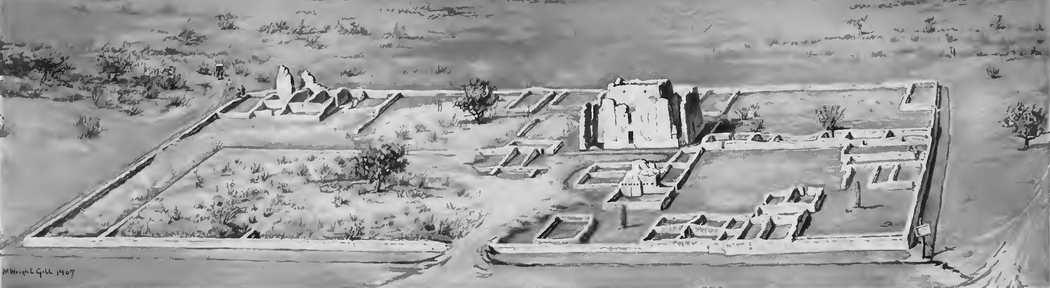

For the DAHA Project we tested several NLP systems: NLTK, Stanford, GATE, and Apache OpenNLP. To evaluate them, we extracted a 10-page section of “FRANK MIDVALE’S INVESTIGATION OF THE SITE OF LA CIUDAD” by David R. Wilcox (tDAR # 4405) and ran the text through each tool at its base settings, and then through a tool that stripped out artifacts from the Optical Character Recognition (OCR) process. We then grouped and ranked the results, comparing them to a baseline produced by a person. Each unique result was counted as valid or invalid against that human baseline, though in a few cases the human missed or miscounted values. The result is a mostly quantitative analysis of the different engines and an attempt to rate the quality of each system.

| Engine | Institution found | Institution valid | Institution invalid | Person found | Person valid | Person invalid | Location found | Location valid | Location invalid |
|---|---|---|---|---|---|---|---|---|---|
| human | 35 | 32 | 3 | 62 | 62 | 0 | 27 | 22 | 4 |
| stanford | 43 | 23 | 18 | 69 | 46 | 17 | 45 | 21 | 25 |
| apache | 42 | 24 | 15.5 | 29 | 25 | 3 | 31 | 11 | 19 |
| apache (2 hour training) | 42 | 24 | 15.5 | 47 | 45 | 3 | 31 | 11 | 19 |
| gate | 0 | 0 | 0 | 66 | 37 | 18 | 1 | 1 | 2 |
| NLTK | 78 | 9 | 69 | 102 | 37 | 65 | 2 | 1 | 1 |

The results showed that, out of the box, the Stanford tool was more accurate overall, although it also produced a number of invalid matches. NLTK was the most aggressive: it had the most matches and the most invalid matches (except for locations). The Apache toolkit did well on the ratio of valid to invalid matches. It seemed successful enough that we spent a few hours determining whether it could easily be trained to improve match quality. The results with “people” were quite good. Stanford found initialized names (E. K. Smith) while the Apache tool did not. We were able to train the Apache tool quite easily to recognize this format. We were also able to train the Apache tool to identify citation references, e.g. (Smith 2017), which would also improve the matches.
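One way to make the "ratio of valid matches to invalid ones" concrete is precision, valid / (valid + invalid), computed from the counts in the first table. The sketch below is an illustration only: the choice of precision as the metric and the names `COUNTS`, `precision`, and `precision_table` are assumptions, not part of the original analysis.

```python
# Counts copied from the first table: (found, valid, invalid) per entity type.
# The dictionary layout and all names here are hypothetical, for illustration.
COUNTS = {
    "stanford": {"institution": (43, 23, 18), "person": (69, 46, 17), "location": (45, 21, 25)},
    "apache": {"institution": (42, 24, 15.5), "person": (29, 25, 3), "location": (31, 11, 19)},
    "gate": {"institution": (0, 0, 0), "person": (66, 37, 18), "location": (1, 1, 2)},
    "NLTK": {"institution": (78, 9, 69), "person": (102, 37, 65), "location": (2, 1, 1)},
}


def precision(valid, invalid):
    """Share of judged matches that were valid; 0.0 when nothing was judged."""
    total = valid + invalid
    return valid / total if total else 0.0


def precision_table(counts):
    """Map each engine to its per-entity-type precision, rounded to 3 places."""
    return {
        engine: {
            kind: round(precision(valid, invalid), 3)
            for kind, (_found, valid, invalid) in kinds.items()
        }
        for engine, kinds in counts.items()
    }


if __name__ == "__main__":
    for engine, row in precision_table(COUNTS).items():
        print(engine, row)
```

Precision is computed from the valid and invalid columns rather than from "found", since the two do not always sum to the found count in the table.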

| Engine | Institution | Person | Location |
|---|---|---|---|
| human | 100.00% | 100.00% | 100.00% |
| stanford | 71.88% | 74.19% | 95.45% |
| apache | 75.00% | 40.32% | 50.00% |
| apache (2 hour person training) | 75.00% | 72.58% | 50.00% |
| gate | 0.00% | 59.68% | 4.55% |
| NLTK | 28.13% | 59.68% | 4.55% |
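The percentages above are consistent with dividing each engine's valid count by the human baseline's valid count (32 institutions, 62 people, 22 locations). The post does not state the formula, so this is an inference from the numbers. A minimal sketch under that assumption follows; the names `HUMAN_VALID`, `ENGINE_VALID`, `share_of_baseline`, and `format_row` are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

# Valid counts copied from the first table; the human row is the baseline.
HUMAN_VALID = {"institution": 32, "person": 62, "location": 22}
ENGINE_VALID = {
    "stanford": {"institution": 23, "person": 46, "location": 21},
    "apache": {"institution": 24, "person": 25, "location": 11},
    "apache (2 hour person training)": {"institution": 24, "person": 45, "location": 11},
    "gate": {"institution": 0, "person": 37, "location": 1},
    "NLTK": {"institution": 9, "person": 37, "location": 1},
}


def share_of_baseline(valid, baseline_valid):
    """Valid matches as a percentage of the human baseline, to 2 decimals.

    Decimal with ROUND_HALF_UP is used so that exact halves such as 28.125
    round up, which binary floats with default formatting would not do.
    """
    pct = Decimal(valid) * 100 / Decimal(baseline_valid)
    return pct.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def format_row(engine, valid_by_kind):
    """Render one table row in the same pipe-delimited style as the post."""
    cells = [
        f"{share_of_baseline(valid_by_kind[kind], HUMAN_VALID[kind])}%"
        for kind in HUMAN_VALID
    ]
    return f"| {engine} | " + " | ".join(cells) + " |"


if __name__ == "__main__":
    for engine, valid_by_kind in ENGINE_VALID.items():
        print(format_row(engine, valid_by_kind))
```

Read this way, the second table measures coverage of the human baseline only. It does not penalize invalid matches, which is why an engine with many invalid matches can still score well.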

Based on the ease of training the Apache tool, and the challenge of the Stanford tool’s license, which makes it more difficult to integrate into tDAR infrastructure, we plan on moving forward with the Apache OpenNLP toolkit. One other note: GATE was challenging to configure.
